reinforcement learning classification


Is it true that reinforcement learning algorithms fall into two types, model-free and model-based? And does this distinction disappear in deep reinforcement learning?

You're right in observing that reinforcement learning (RL) algorithms are often categorized based on whether they are model-free or model-based. However, this distinction exists in both classical RL and deep reinforcement learning (DRL). Let me clarify:

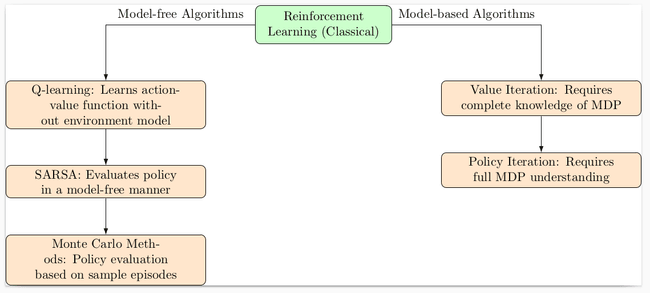

1. Reinforcement Learning (Classical):

Model-free Algorithms:

- Q-learning: Learns the action-value function without a model of the environment.

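- SARSA: An on-policy temporal-difference method that learns the action-value function of the policy the agent is currently following.

- Monte Carlo Methods: Estimate value functions by averaging the returns observed over complete sample episodes.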
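To make the difference between Q-learning and SARSA concrete, here is a minimal sketch of their tabular update rules. The nested-dictionary layout of `Q`, the function names, the toy states `"A"` and `"B"`, and the values of `alpha` and `gamma` are illustrative assumptions, not part of any particular library.

```python
import copy

def q_learning_update(Q, s, a, r, s_next, alpha=0.5, gamma=0.9):
    # Off-policy target: bootstrap from the best action available in s_next.
    target = r + gamma * max(Q[s_next].values())
    Q[s][a] += alpha * (target - Q[s][a])

def sarsa_update(Q, s, a, r, s_next, a_next, alpha=0.5, gamma=0.9):
    # On-policy target: bootstrap from the action actually taken in s_next.
    target = r + gamma * Q[s_next][a_next]
    Q[s][a] += alpha * (target - Q[s][a])

# Hypothetical two-state table used only for this illustration.
Q0 = {"A": {"left": 0.0, "right": 0.0}, "B": {"left": 1.0, "right": 2.0}}

q_ql = copy.deepcopy(Q0)
q_learning_update(q_ql, "A", "right", 1.0, "B")          # target = 1 + 0.9 * 2.0

q_sarsa = copy.deepcopy(Q0)
sarsa_update(q_sarsa, "A", "right", 1.0, "B", "left")    # target = 1 + 0.9 * 1.0

print(q_ql["A"]["right"], q_sarsa["A"]["right"])         # roughly 1.4 and 0.95
```

Neither function touches transition probabilities: both learn only from the observed tuple of state, action, reward, and next state, which is what makes them model-free.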

Model-based Algorithms:

- Value Iteration & Policy Iteration: These dynamic programming methods require complete knowledge of the MDP, including transition probabilities and rewards. They plan with a known model rather than learning from sampled experience.
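As a rough illustration of planning with a fully known MDP, the sketch below runs value iteration on a hypothetical two-state problem. The `MDP` dictionary, its state and action names, and the discount factor are all made up for this example; each entry lists `(probability, next_state, reward)` outcomes.

```python
# Hypothetical MDP: MDP[state][action] -> list of (probability, next_state, reward).
MDP = {
    0: {"stay": [(1.0, 0, 0.0)], "go": [(1.0, 1, 1.0)]},
    1: {"stay": [(1.0, 1, 2.0)], "go": [(1.0, 0, 0.0)]},
}

def action_value(V, outcomes, gamma):
    # Expected one-step return, computed from the known transition model.
    return sum(p * (r + gamma * V[s2]) for p, s2, r in outcomes)

def value_iteration(mdp, gamma=0.5, tol=1e-10):
    V = {s: 0.0 for s in mdp}
    while True:
        delta = 0.0
        for s in mdp:
            best = max(action_value(V, out, gamma) for out in mdp[s].values())
            delta = max(delta, abs(best - V[s]))
            V[s] = best  # in-place (Gauss-Seidel style) Bellman backup
        if delta < tol:
            break
    policy = {
        s: max(mdp[s], key=lambda a: action_value(V, mdp[s][a], gamma))
        for s in mdp
    }
    return V, policy

V, policy = value_iteration(MDP)
print(V, policy)  # V is close to {0: 3.0, 1: 4.0}; policy is {0: 'go', 1: 'stay'}
```

No interaction with an environment takes place here: every quantity comes from the transition table, which is why this family of methods needs the model up front.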

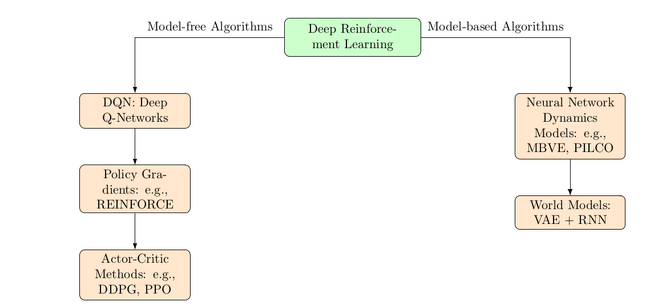

2. Deep Reinforcement Learning:

In DRL, the distinction between model-free and model-based algorithms still exists, but the lines can sometimes blur due to the complexities introduced by neural network function approximators.

Model-free Algorithms in DRL:

- DQN (Deep Q-Networks): Extends Q-learning using deep neural networks. Doesn't use a model of the environment.

- Policy Gradients (e.g., REINFORCE): Directly optimizes the policy without a model.

- Actor-Critic Methods (e.g., DDPG, PPO): These methods have both policy (actor) and value (critic) approximations, but they don't typically use a learned model of the environment.
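The idea of optimizing a policy directly can be sketched with REINFORCE on a two-armed bandit. To stay dependency-free, this example replaces the neural network with a softmax over two preference values; the function names, the fixed arm rewards, the learning rate, and the seed are assumptions chosen for illustration.

```python
import math
import random

def softmax(prefs):
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]

def reinforce_bandit(rewards, steps=500, lr=0.1, seed=0):
    rng = random.Random(seed)
    prefs = [0.0] * len(rewards)  # policy parameters (stand-in for network weights)
    for _ in range(steps):
        probs = softmax(prefs)
        action = rng.choices(range(len(rewards)), weights=probs)[0]
        ret = rewards[action]  # the return of a one-step episode
        for a in range(len(prefs)):
            # Gradient of log pi(action) with respect to prefs[a] for a softmax policy.
            grad_log = (1.0 if a == action else 0.0) - probs[a]
            prefs[a] += lr * ret * grad_log
    return softmax(prefs)

final_probs = reinforce_bandit([0.0, 1.0])  # arm 1 always pays 1, arm 0 pays 0
print(final_probs)
```

The update uses only sampled actions and observed rewards, so the policy shifts toward the better arm without ever estimating how the environment works.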

Model-based Algorithms in DRL:

- Neural Network Dynamics Models: These methods learn a model of the environment using a neural network. Once a model is learned, planning or trajectory optimization can be done inside the learned model. MVE (Model-Based Value Expansion) is one example. PILCO follows the same learn-then-plan pattern, although its dynamics model is a Gaussian process rather than a neural network.

- World Models: A variational autoencoder (VAE) compresses observations into a compact latent representation, and a recurrent neural network (RNN) learns to predict the environment's dynamics in that latent space.

With the flexibility of deep learning, many hybrid approaches are also emerging that combine elements of both categories, for example by using a learned model to generate extra training data for a model-free learner.
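The learn-then-plan pattern shared by these methods can be sketched in a few lines. To keep the example small and dependency-free, a linear least-squares model stands in for the neural network, and planning is an exhaustive search over short action sequences. The toy dynamics `0.9 * s + 0.5 * a`, the cost function, and all names are assumptions made for this illustration.

```python
import itertools
import random

def true_dynamics(s, a):
    # Unknown to the agent; used here only to generate training data.
    return 0.9 * s + 0.5 * a

def collect_data(n=50, seed=0):
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        s, a = rng.uniform(-1, 1), rng.uniform(-1, 1)
        data.append((s, a, true_dynamics(s, a)))
    return data

def fit_linear_model(data):
    # Least squares for s' ~ w_s * s + w_a * a via the 2x2 normal equations.
    ss = sum(s * s for s, a, y in data)
    sa = sum(s * a for s, a, y in data)
    aa = sum(a * a for s, a, y in data)
    sy = sum(s * y for s, a, y in data)
    ay = sum(a * y for s, a, y in data)
    det = ss * aa - sa * sa
    return (aa * sy - sa * ay) / det, (ss * ay - sa * sy) / det

def plan_first_action(model, state, horizon=3,
                      candidates=(-1.0, -0.5, 0.0, 0.5, 1.0)):
    # Roll out every candidate action sequence inside the learned model
    # and return the first action of the cheapest one.
    w_s, w_a = model
    best_cost, best_first = float("inf"), None
    for seq in itertools.product(candidates, repeat=horizon):
        s, cost = state, 0.0
        for a in seq:
            s = w_s * s + w_a * a
            cost += s * s  # penalize distance from the target state 0
        if cost < best_cost:
            best_cost, best_first = cost, seq[0]
    return best_first

model = fit_linear_model(collect_data())
first_action = plan_first_action(model, state=1.0)
print(model, first_action)
```

The planner never calls `true_dynamics`; all rollouts happen in the fitted model, so any error in the fit carries straight through to the chosen action.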

What is the distinction between model-free and model-based reinforcement learning, and can you provide comprehensive examples for each?

The ultimate goal in reinforcement learning is to learn an optimal policy, which dictates the best action to take in every state to maximize expected rewards over time. However, how we approach learning this policy can differ based on whether we use a model-free or model-based method. Let's clarify:

Model-Based Reinforcement Learning:

- Learning the Model:

- First, the agent obtains a model of the environment: it either learns the model from data or is given it.

- This model predicts the next state and reward for a given state-action pair.

- Policy Learning using the Model:

- Once a model is available, the agent can simulate many possible future trajectories (sequences of states, actions, and rewards) using this model without actually interacting with the real environment.

- By simulating these trajectories, the agent can evaluate different actions and use this information to learn or improve its policy.
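The two stages above can be sketched on a small chain environment: the agent first records transitions from random exploration, then chooses actions by simulating trajectories inside the recorded model. The five-state chain, the reward of 1 at the goal, the lookahead depth, and every name below are assumptions made for illustration.

```python
import random

GOAL = 4
LEFT, RIGHT = 0, 1
GAMMA = 0.9

def real_step(state, action):
    # The real environment: a chain 0..4 with reward 1 on reaching state 4.
    nxt = min(state + 1, GOAL) if action == RIGHT else max(state - 1, 0)
    return nxt, (1.0 if nxt == GOAL else 0.0)

def learn_model(episodes=200, seed=0):
    # Stage 1: learn a tabular model (s, a) -> (s', r) from random exploration.
    rng = random.Random(seed)
    model = {}
    for _ in range(episodes):
        s = 0
        while s != GOAL:
            a = rng.choice((LEFT, RIGHT))
            s2, r = real_step(s, a)
            model[(s, a)] = (s2, r)
            s = s2
    return model

def simulated_return(model, state, depth):
    # Best discounted return over all simulated trajectories of the given depth.
    if depth == 0 or state == GOAL:
        return 0.0
    returns = []
    for a in (LEFT, RIGHT):
        if (state, a) in model:
            s2, r = model[(state, a)]
            returns.append(r + GAMMA * simulated_return(model, s2, depth - 1))
    return max(returns, default=0.0)

def plan(model, state, depth=4):
    # Stage 2: evaluate each action by simulation only, with no real interaction.
    def score(a):
        if (state, a) not in model:
            return float("-inf")
        s2, r = model[(state, a)]
        return r + GAMMA * simulated_return(model, s2, depth - 1)
    return max((LEFT, RIGHT), key=score)

model = learn_model()
policy = [plan(model, s) for s in range(GOAL)]
print(policy)  # the action chosen in states 0..3
```

Once `learn_model` has finished, `plan` makes no further calls to `real_step`, which is the sense in which the agent can think ahead without touching the real environment.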

Model-Free Reinforcement Learning:

- Learning the Policy Directly:

- The agent learns the policy (or a value function from which the policy is derived) directly from its interactions with the environment.

- There's no explicit model of the environment's dynamics. Instead, the agent updates its policy (or value function) based on the rewards it observes.
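For contrast, here is a sketch of a model-free learner, using tabular Q-learning with epsilon-greedy exploration on a five-state chain. The environment, hyperparameters, seed, and names are assumptions made for illustration.

```python
import random

N_STATES, GOAL = 5, 4
LEFT, RIGHT = 0, 1

def step(state, action):
    # Chain 0..4 with reward 1 on reaching state 4; the agent never inspects this function.
    nxt = min(state + 1, GOAL) if action == RIGHT else max(state - 1, 0)
    return nxt, (1.0 if nxt == GOAL else 0.0)

def train_q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    Q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        s = 0
        while s != GOAL:
            # Explore with probability epsilon, and break ties randomly.
            if rng.random() < epsilon or Q[s][LEFT] == Q[s][RIGHT]:
                a = rng.choice((LEFT, RIGHT))
            else:
                a = LEFT if Q[s][LEFT] > Q[s][RIGHT] else RIGHT
            s2, r = step(s, a)
            # Update directly from the observed reward and next state.
            # Q[GOAL] is never updated, so the terminal state has value 0.
            target = r + gamma * max(Q[s2])
            Q[s][a] += alpha * (target - Q[s][a])
            s = s2
    return Q

Q = train_q_learning()
greedy = [RIGHT if q[RIGHT] > q[LEFT] else LEFT for q in Q[:GOAL]]
print(greedy)  # greedy action in states 0..3
```

The table `Q` is the only thing the agent stores. It never records where an action leads, so every improvement has to come from another real call to `step`.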

Both approaches aim to learn a good or optimal policy, but they differ in their methods:

- Model-Based: "I'll first try to understand (model) how the environment works. Then, using this understanding, I'll figure out the best actions to take."

- Model-Free: "I'll directly learn the best actions based on the rewards I receive, without trying to explicitly understand the environment's dynamics."

In practice, while model-free methods like Q-learning and policy gradients are widely used, especially in deep reinforcement learning, model-based methods can be more sample-efficient since they can "think ahead" using the learned model without needing actual interactions for each decision. However, the quality of the learned policy in model-based methods is heavily dependent on the accuracy of the learned model.
